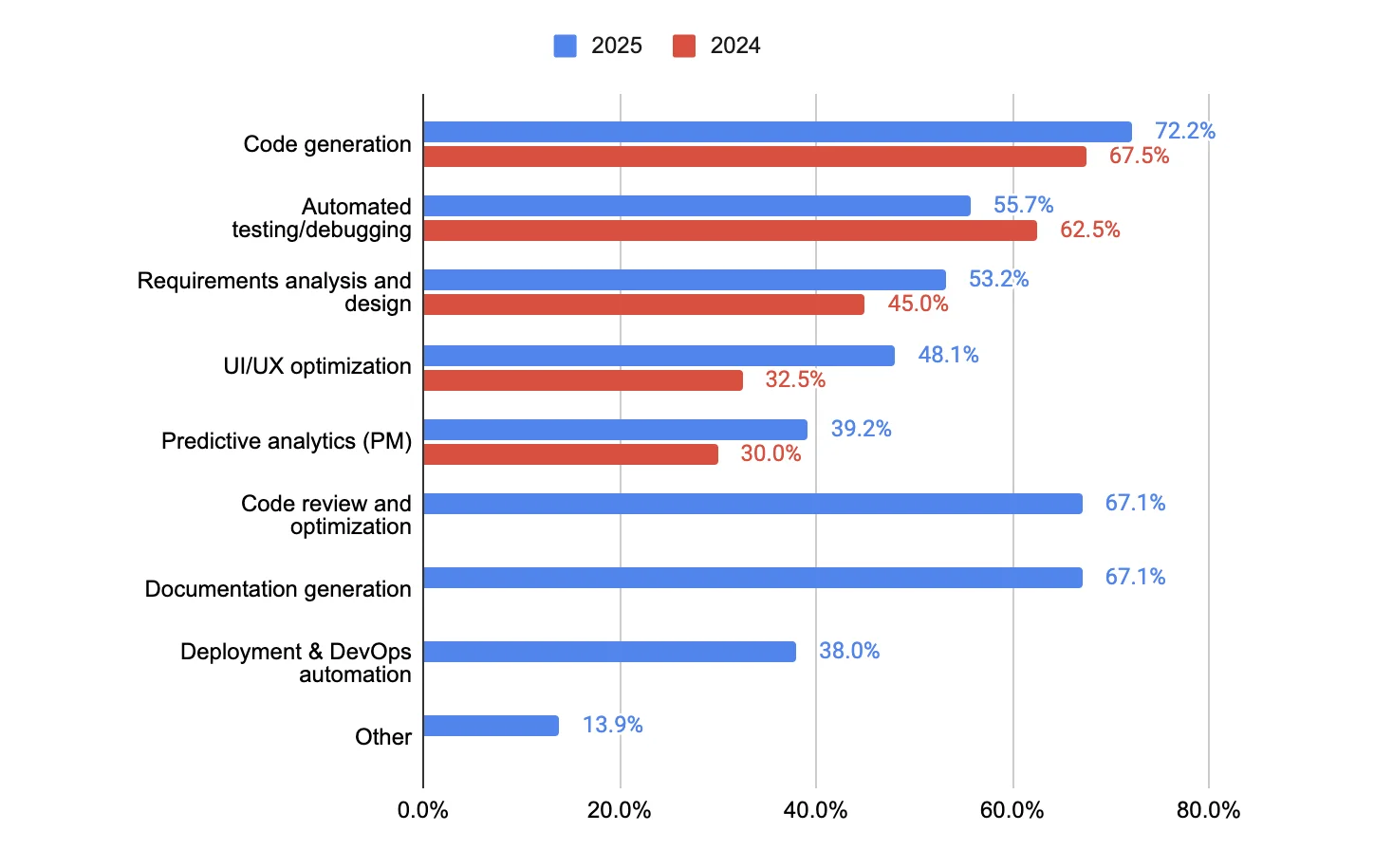

AI has quickly become indispensable in software development. According to Google’s DORA report, 90% of technology professionals now use AI in their daily work. GitHub also reports that 41% of all source code worldwide is now generated by AI, a figure that reaches 61% in Java projects.

AI Adoption by Task in Software Development – Comparison between 2025 and 2024

However, alongside this productivity surge comes a concerning reality. IBM reports that 13% of surveyed organizations have experienced data leaks related to AI models or AI-driven applications. As a result, data security remains the biggest barrier for companies looking to integrate AI into their development workflow.

In this article, Miichisoft’s CDO shares practical insights on best practices for safe AI adoption in software development.

4 Most Common Security Risks of AI Adoption in Software Development

Data and Source Code Leaks When Using Public AI Tools

The biggest and most common risk of AI adoption in software development comes from engineers unintentionally entering source code, internal documents, or customer information into public AI tools. That data is then sent to external servers, where it may be stored in logs or even used to train AI models.

A well-known example is Samsung’s incident in 2023: several engineers pasted internal source code and other sensitive information into ChatGPT for help, exposing that data to an external service and leading Samsung to ban the use of generative AI tools on company devices. The case shows how serious the issue can become when companies do not establish strict control policies from the start.

Source: Google
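One practical control against this kind of leak is to screen text before it leaves the developer's machine. The Python sketch below is illustrative only: the pattern names, the regular expressions, and the `check_prompt` helper are hypothetical, and a production setup would rely on a maintained secret scanner and client-specific rules rather than a handful of regexes.

```python
import re

# Hypothetical patterns for illustration only; a real deployment would use a
# maintained secret scanner plus rules agreed with the client.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "password_assignment": re.compile(r"(?i)\b(password|passwd|secret)\s*[:=]\s*\S+"),
}


def find_sensitive(text: str) -> list[str]:
    """Return the names of all patterns that match the text."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)]


def check_prompt(text: str) -> str:
    """Raise if the prompt appears to contain sensitive data; otherwise return it unchanged."""
    hits = find_sensitive(text)
    if hits:
        raise ValueError(f"Prompt blocked, possible sensitive data: {', '.join(hits)}")
    return text


if __name__ == "__main__":
    print(find_sensitive("Contact alice@example.com, password: hunter2"))
    # ['email', 'password_assignment']
```

Regex screening catches only obvious cases, so it complements, rather than replaces, the policy and tooling controls described later in this article.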

AI-Generated Code May Contain Security Vulnerabilities

This is among the most common risks of AI adoption in software development, as studies show that 45% of AI-generated code introduces security vulnerabilities. Such code often includes issues like missing input validation, unsafe data handling, or incomplete compliance with security standards.

The root cause lies in how LLMs are trained: they learn from massive open-source repositories where secure and insecure code coexist. As a result, AI may reproduce unsafe coding patterns as if they were valid solutions. This creates a critical requirement: companies must cross-check and thoroughly review all AI-generated code before integrating it into production systems.
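To make the review point concrete, here is a minimal Python sketch, using only the standard `sqlite3` module, of a pattern reviewers commonly catch: user input interpolated directly into a SQL query (missing input validation), next to a reviewed version that validates the input and passes it as a bound parameter. The table and function names are hypothetical.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str):
    # Pattern an assistant may suggest: user input is interpolated into SQL,
    # so input such as "' OR '1'='1" changes the meaning of the query.
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str):
    # Reviewed version: validate the input, then pass it as a bound parameter
    # so the database always treats it as data, never as SQL.
    if not username or len(username) > 64:
        raise ValueError("invalid username")
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (username,)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    payload = "' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # 2: every row is returned
    print(len(find_user_safe(conn, payload)))    # 0: treated as a literal name
```

Both functions look equally plausible at a glance, which is why AI-generated code needs a deliberate security review rather than a quick read.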

Risk of “Poisoning Attacks” from Malicious Data

AI models can become targets of “data poisoning” attacks, in which attackers inject harmful data into training sets. A closely related threat uses crafted prompts to exploit model weaknesses. Either can cause the AI to produce insecure code or behave unsafely in ways that users may not immediately detect.

A 2023 study found that AI coding assistants are highly vulnerable to this attack vector. Companies using AI in software development therefore need strict controls over data sources and systematic evaluation of AI outputs.
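One simple form of data source control is to accept only training or fine-tuning files whose contents match a digest recorded when the source was reviewed. The sketch below assumes a hypothetical manifest that maps file names to SHA-256 digests. It detects files that were never approved or were modified after review, but on its own it cannot detect poisoned data that was already present at review time.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Compute the SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_dataset(files: list[Path], approved: dict[str, str]) -> list[str]:
    """Return the names of files that are unapproved or have been modified.

    `approved` maps a file name to the SHA-256 digest recorded when the
    data source was reviewed (a hypothetical manifest format).
    """
    rejected = []
    for path in files:
        expected = approved.get(path.name)
        if expected is None or sha256_of(path) != expected:
            rejected.append(path.name)
    return rejected


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data = Path(tmp, "reviewed.jsonl")
        data.write_text('{"prompt": "add", "completion": "a + b"}\n')
        manifest = {"reviewed.jsonl": sha256_of(data)}
        # Simulate tampering after the review.
        data.write_text('{"prompt": "add", "completion": "eval(input())"}\n')
        print(verify_dataset([data], manifest))  # ['reviewed.jsonl']
```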

Loss of Data Control When Information Is Sent to External Systems

When using cloud-based AI tools, organizations often cannot guarantee that submitted data can be fully deleted or that the service provider will not use it for other purposes.

This concern was also part of the reason Samsung decided to restrict employee use of ChatGPT. In reality, once internal data is uploaded to a third-party system, it becomes extremely difficult to “retrieve” or fully remove it. For companies that manage core source code, trade secrets, or sensitive user information, this risk is especially serious.

Insights from Miichisoft’s CDO: How to Apply AI Safely in Real-World Development

In this context, Mr. Nguyen Ha Tan, CDO of Miichisoft, shares practical observations on how the company ensures data security when applying AI in software development. According to him, the greatest risk is that sensitive data, especially customer information, could be exposed if it is not properly controlled. To minimize this risk, Miichisoft implements strict security measures built around three key pillars: strengthening knowledge, standardizing workflows, and training employees continuously.

From a workforce perspective, training is essential. Team members are guided on how to leverage experience and standardized processes effectively while being equipped with the skills needed to use AI safely. This ensures that all activities adhere to the security policies required by clients.

Ultimately, he emphasizes that to fully unlock the potential of AI, Miichisoft continuously refines its processes and working methods with the goal of accelerating development speed while ensuring customer data remains fully protected.

Let’s explore Mr. Tan’s insights on how Miichisoft maintains robust data security for AI adoption in software development!

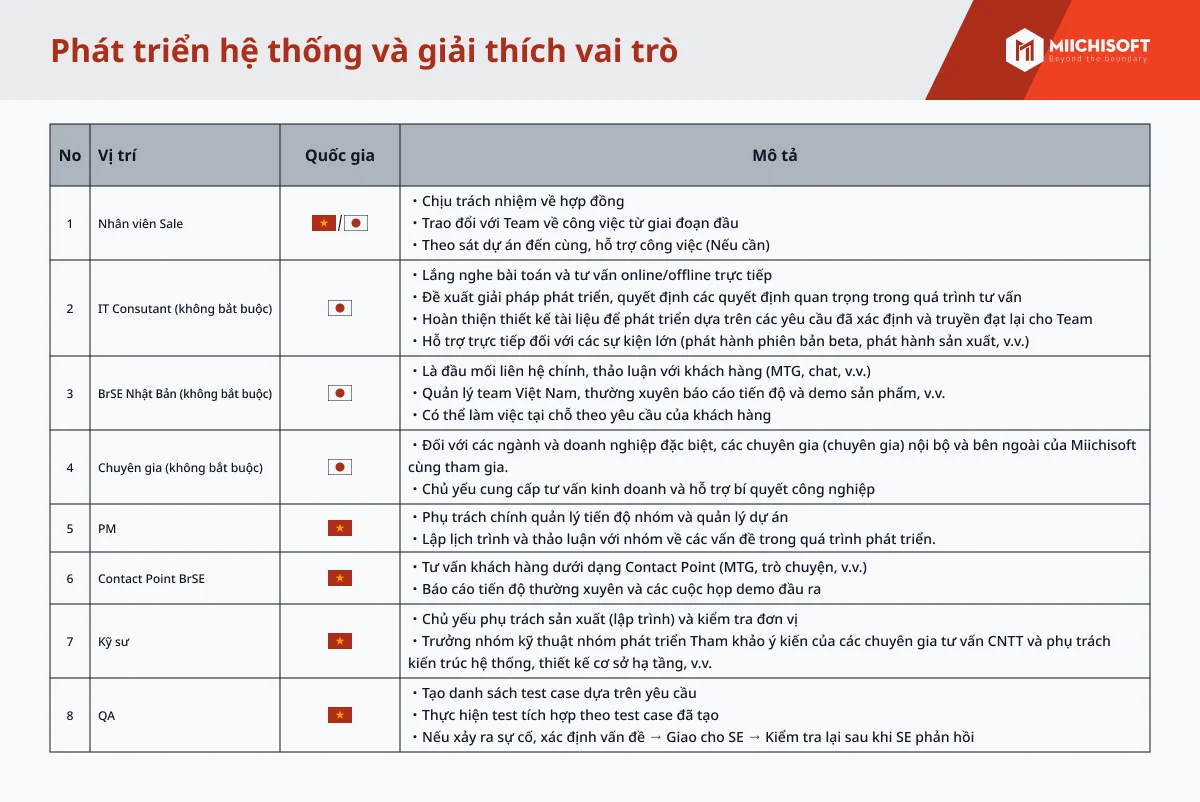

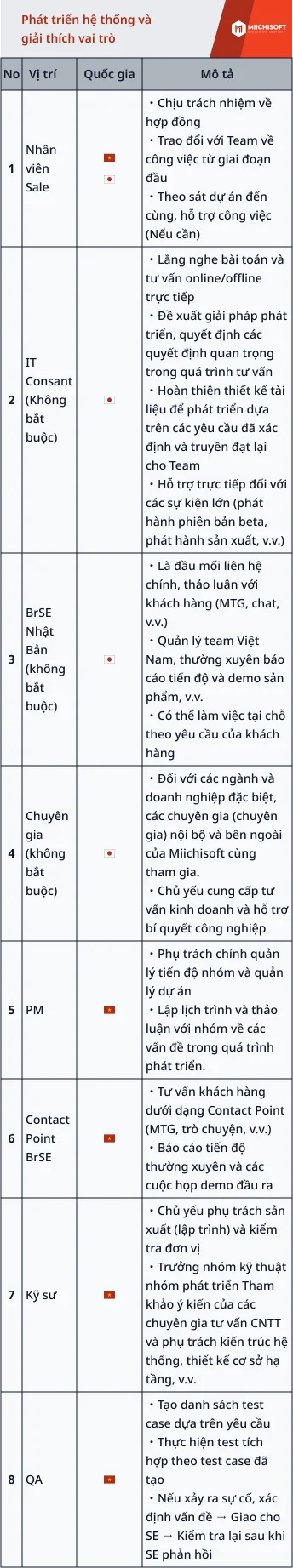

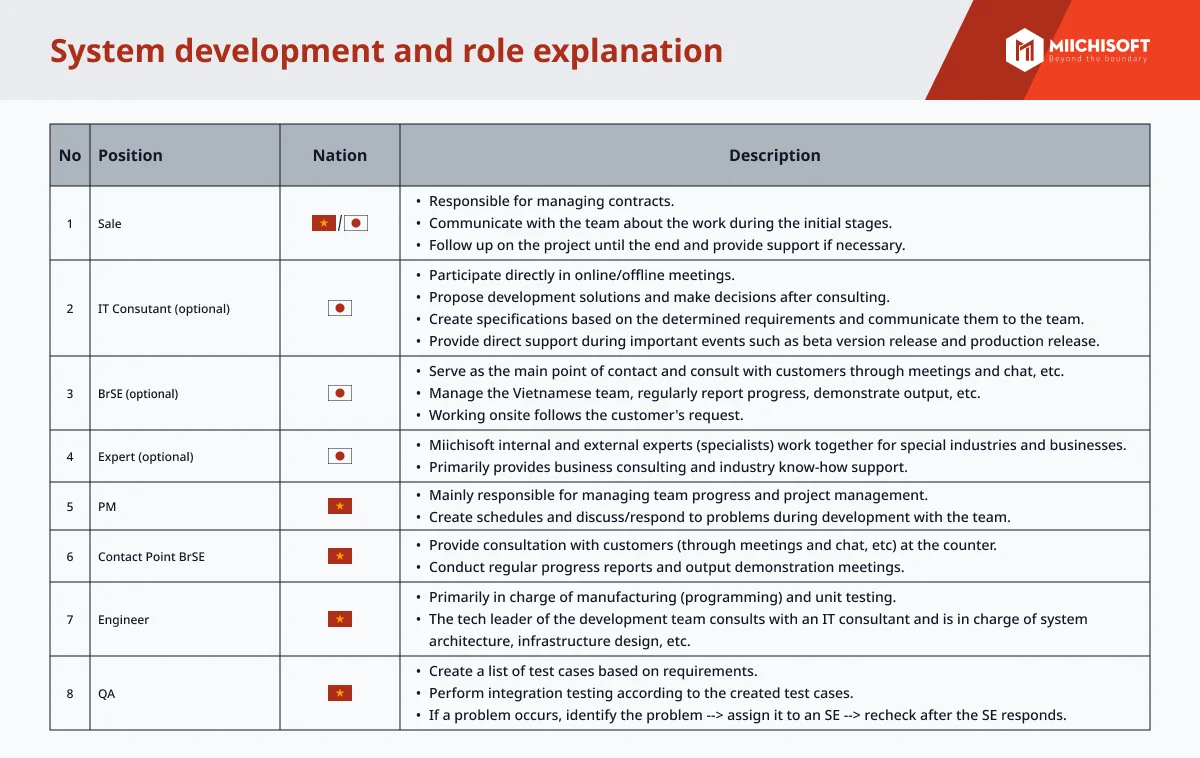

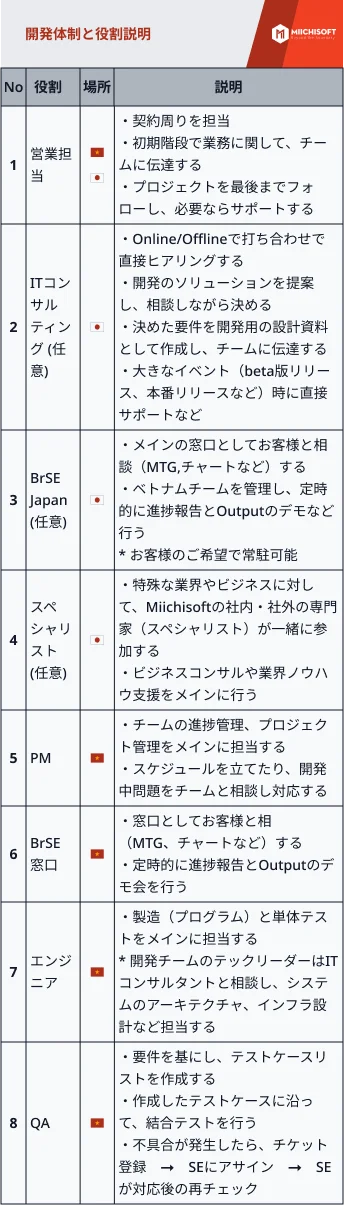

Miichisoft’s Security Workflow for AI Adoption in Software Development

To apply AI safely and in a controlled manner, Miichisoft has developed a clear five-step security workflow. This framework ensures that customer data is always protected, the development environment consistently meets security requirements, and all AI-related activities remain within a safe and approved boundary.

Step 1: Align Security Policies with the Client

Every AI-related project begins with clearly defining the scope, rules, and permissions agreed upon with the client. Engineers are only allowed to use AI when explicit approval is provided, and all data must be handled strictly within the agreed scope.

This prevents any internal information, source code, or sensitive data from being unintentionally exposed to external systems. Such compliance creates a secure foundation for applying AI in software development from the very first step.
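A scope agreement like this can also be encoded so that tooling enforces it. The sketch below is a hypothetical Python representation; the `ProjectAIPolicy` class and the data category names are assumptions, not Miichisoft's actual tooling. It denies by default and permits AI use only when the client has given explicit approval and the data falls inside the agreed scope.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ProjectAIPolicy:
    """Hypothetical record of the AI rules agreed with a client."""

    client: str
    ai_approved: bool = False  # explicit client approval for AI use
    allowed_data: frozenset[str] = field(default_factory=frozenset)

    def permits(self, data_category: str) -> bool:
        # Deny by default: AI use requires approval AND an in-scope data category.
        return self.ai_approved and data_category in self.allowed_data


if __name__ == "__main__":
    policy = ProjectAIPolicy(
        "example-client",
        ai_approved=True,
        allowed_data=frozenset({"public_docs", "test_code"}),
    )
    print(policy.permits("test_code"))     # True
    print(policy.permits("customer_pii"))  # False: outside the agreed scope
```

Making the policy immutable (`frozen=True`) means that changing the scope requires creating a new, reviewable policy object rather than silently editing the existing one.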

Step 2: Select Appropriate and Secure GenAI Tools

Not all AI tools are permitted for use. Miichisoft prioritizes internal AI solutions or GenAI tools that meet strict security standards and receive client approval, completely avoiding the use of public AI services for project data.

Choosing the right tools allows the team to benefit from AI efficiency while ensuring that no customer information is sent to uncontrolled third-party or public platforms.
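Tool restrictions are often enforced by allowing network calls only to approved AI endpoints. Here is a minimal Python sketch of such a check using the standard `urllib.parse` module. The host names are placeholders. Matching the exact host, rather than a substring, prevents look-alike domains such as `llm.internal.example.com.evil.io` from passing.

```python
from urllib.parse import urlparse

# Hypothetical allowlist: hosts the client has approved, such as an internal
# model gateway. Public AI services are simply absent from the list.
APPROVED_AI_HOSTS = {"llm.internal.example.com", "genai-gateway.example.co.jp"}


def is_approved_endpoint(url: str) -> bool:
    """Allow only HTTPS endpoints whose exact host is on the allowlist."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in APPROVED_AI_HOSTS


if __name__ == "__main__":
    print(is_approved_endpoint("https://llm.internal.example.com/v1/chat"))  # True
    print(is_approved_endpoint("https://public-ai.example.net/v1/chat"))     # False
```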

Step 3: Configure a Secure Development Environment

To prevent data leakage, the AI development environment is completely isolated from the actual project data environment. Access to these systems is strictly controlled and clearly tiered by role. Only authorized engineers can use them, and all activities are monitored.

This setup ensures that customer data is never mixed with the AI testing environment, while also minimizing risks that may arise from personal devices or public networks.
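Role-tiered access with monitoring can be sketched as a permission check that logs every attempt, whether allowed or not. In this Python example the roles, action names, and minimum tiers are assumptions chosen for illustration, and unknown actions are denied by default.

```python
import logging
from enum import IntEnum

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(message)s")
audit_log = logging.getLogger("ai_env.audit")


class Role(IntEnum):
    # Hypothetical tiers; a higher value grants more access.
    VIEWER = 1
    ENGINEER = 2
    ADMIN = 3


# Assumed minimum role required for each action in the isolated AI environment.
REQUIRED_ROLE = {
    "run_ai_assistant": Role.ENGINEER,
    "change_model_config": Role.ADMIN,
}


def authorize(user: str, role: Role, action: str) -> bool:
    """Check the user's tier against the action and record every attempt."""
    required = REQUIRED_ROLE.get(action)
    allowed = required is not None and role >= required
    audit_log.info("user=%s role=%s action=%s allowed=%s", user, role.name, action, allowed)
    return allowed


if __name__ == "__main__":
    authorize("alice", Role.ENGINEER, "run_ai_assistant")     # allowed, logged
    authorize("alice", Role.ENGINEER, "change_model_config")  # denied, logged
```

Logging denied attempts as well as successful ones is what makes the monitoring useful: repeated denials are often the first sign of misuse.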

Step 4: Integrate AI into the Development Workflow

AI is used to accelerate and automate suitable tasks, such as:

- Suggesting, reviewing, and optimizing source code

- Automating documentation, including writing specs or technical reports

- Supporting error analysis and faster debugging

More importantly, AI is not used for tasks involving sensitive information, such as handling user databases or processing critical specifications. This ensures that all important decisions remain under human control and reduces the risk of potential errors or vulnerabilities in AI-generated outputs.
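The split between AI-suitable and human-only work can be expressed as a simple routing rule. In this hypothetical Python sketch, the task and data labels are assumptions: any task that touches sensitive data goes to engineers regardless of its type, and only the listed task types are eligible for AI assistance.

```python
# Hypothetical task categories mirroring the list of suitable tasks above.
AI_SUITABLE_TASKS = {"code_suggestion", "code_review", "documentation", "debugging"}
# Assumed labels for data that must stay under human control.
SENSITIVE_MARKERS = {"user_database", "critical_spec"}


def route_task(task_type: str, touches: set[str]) -> str:
    """Return 'ai_assisted' or 'human_only' for a task."""
    # Sensitive data overrides the task type: humans handle it directly.
    if touches & SENSITIVE_MARKERS:
        return "human_only"
    # Deny by default: unlisted task types are not AI-assisted.
    return "ai_assisted" if task_type in AI_SUITABLE_TASKS else "human_only"


if __name__ == "__main__":
    print(route_task("documentation", set()))         # ai_assisted
    print(route_task("debugging", {"user_database"}))  # human_only
```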

Step 5: Conduct Safety Checks and Assurance Before Deployment

Before AI is officially integrated into a project, the PM and CTO review the entire workflow to assess its safety level. Miichisoft also conducts periodic security audits to ensure that AI usage does not affect customer data throughout the project lifecycle. This serves as the final protection layer, maintaining transparency and safety across all AI-related activities.
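A pre-deployment gate like this can be checked mechanically before AI tooling is enabled for a project. The sketch below assumes that both PM and CTO sign-off are required and that the most recent security audit must fall within a 90-day window. That window and the `ReleaseReview` structure are illustrative assumptions, not a documented Miichisoft rule.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ReleaseReview:
    """Hypothetical record of the review state for one project."""

    pm_approved: bool
    cto_approved: bool
    last_security_audit: date


def ready_for_ai_use(review: ReleaseReview, today: date, max_audit_age_days: int = 90) -> bool:
    """Require both sign-offs and a sufficiently recent security audit.

    The 90-day default is an assumed value chosen for illustration.
    """
    audit_fresh = today - review.last_security_audit <= timedelta(days=max_audit_age_days)
    return review.pm_approved and review.cto_approved and audit_fresh


if __name__ == "__main__":
    review = ReleaseReview(pm_approved=True, cto_approved=True, last_security_audit=date(2025, 1, 1))
    print(ready_for_ai_use(review, today=date(2025, 2, 1)))  # True: audit is 31 days old
```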

Miichisoft – Secure AI Solutions for Your Business

As AI becomes an increasingly essential tool in software development, many companies still hesitate due to security risks. To leverage AI effectively, organizations need an experienced partner who truly understands the technology and is well-versed in security processes.

Miichisoft has a team proficient in both AI and Japanese, with extensive experience working directly with Japanese clients and a physical office in Japan. We follow strict security control procedures and deploy AI in a fully protected environment, enabling faster software development, reducing human errors, and ensuring customer data is always safeguarded.

If your business is struggling with how to adopt AI, contact Miichisoft today for a free consultation!

FAQ

Are Miichisoft developers allowed to use public ChatGPT?

No. Entering critical project data into public AI tools is strictly prohibited, so that customer information remains secure.

Can AI models log or store my data?

Not when the models are deployed locally or on a private cloud. In those setups, all data stays under the control of Miichisoft and the client and is not logged by any external service.

What does Miichisoft do if a client does not allow the use of AI?

We fully comply with the client’s requirements and ensure the entire development process is carried out without using public AI or any tools that have not been explicitly approved.

Can AI be deployed directly within the client’s internal environment?

Yes. GenAI models that support self-hosting, such as open-weight models, can be customized and deployed on-premises in the client’s infrastructure, ensuring that data never leaves their internal systems.

How does Miichisoft improve efficiency and security when using AI?

We combine an AI-proficient team, strict security control processes, and extensive experience working with Japanese clients to accelerate development, reduce human errors, and maximize customer data protection.